Working with Log Drains

Log drains collect all of your logs using a service specializing in storing app logs. Learn how to use them with Vercel Integrations here.

Log Drains are available on Pro and Enterprise plans.

Inspecting logs for the Build Step, Runtime, and Edge Network traffic of deployments is important in understanding the behavior of your application.

Log drains let you debug, monitor, and analyze logs from your applications. When a new log line is created, it can be forwarded to archival, search, and alerting services through HTTPS, HTTP, TLS, and TCP.
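To make the forwarding step concrete, here is a minimal sketch of a receiving endpoint for the HTTP/HTTPS case. It is an illustration under stated assumptions, not Vercel's documented format: it assumes each delivery is a POST whose body is either a JSON array of log objects or newline-delimited JSON, and the `message` field, the `parse_log_batch` and `serve` helpers, and port 8080 are all hypothetical names chosen for this example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def parse_log_batch(body: bytes) -> list:
    """Parse one delivery into a list of log entries.

    Assumption: the body is either a JSON array or newline-delimited JSON.
    Check your drain's configured delivery format before relying on this.
    """
    text = body.decode("utf-8").strip()
    if not text:
        return []
    if text.startswith("["):
        return list(json.loads(text))
    # Newline-delimited JSON: one object per non-empty line.
    return [json.loads(line) for line in text.splitlines() if line.strip()]


class DrainHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            entries = parse_log_batch(self.rfile.read(length))
        except (UnicodeDecodeError, json.JSONDecodeError):
            # An error status here counts as a failed delivery on the sender side.
            self.send_response(400)
            self.end_headers()
            return
        for entry in entries:
            print(entry)  # Replace with storage, search indexing, or alerting.
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Start the hypothetical receiver; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), DrainHandler).serve_forever()
```

Calling `serve()` starts the listener locally. A real endpoint would also need to be reachable over HTTPS and should authenticate incoming requests, which this sketch omits.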

Log drains on Vercel enable you to:

- Store logs persistently

- Store a large volume of logs

- Create alerts based on logging patterns

- Generate metrics and graphs from log data

You can add log drains in two ways:

Log drains are available to all users on the Pro and Enterprise plans. If you are on the Hobby or Pro Trial plan, you'll need to upgrade to Pro to access log drains.

While users on the Hobby or Pro Trial plans can view the log drains settings in the dashboard, they cannot configure or set them up.

Log drains usage is billed based on the pricing table below.

To learn more about Managed Infrastructure on the Pro plan, and how to understand your invoices, see understanding my invoice.

Hobby users who created log drains before 23rd May 2024 can continue to use their existing log drains through the integration for free, forever. However, they need to upgrade their account to the Pro plan to create new log drains.

See Optimizing log drains for information on how to manage costs associated with log drains.

- From your team's dashboard, select the Settings tab

- Select Log Drains from the list

- In available log drains, select the context menu and click Disable to disable a log drain

- In available log drains, select the context menu and click Remove to remove a log drain

You can also integrate log drains with third-party logging services. The Integrations Marketplace lists numerous logging services that you can integrate with.

To do so, follow these steps:

- From the Vercel dashboard, go to Team Settings > Log Drains and click Add Log Drain.

- Select the Native or Third-party integration you want to use and click Continue.

- Follow the configuration steps provided and choose a project to connect with the service.

- Watch your logs appear on the selected service.

With log drains, you can collect your deployment logs, forward them to a third-party logging provider, and act on them there.

Once integrated, you can also view and manage these logging services under the Active Log Drains list. The log drains integration also appears in your list of integrations.

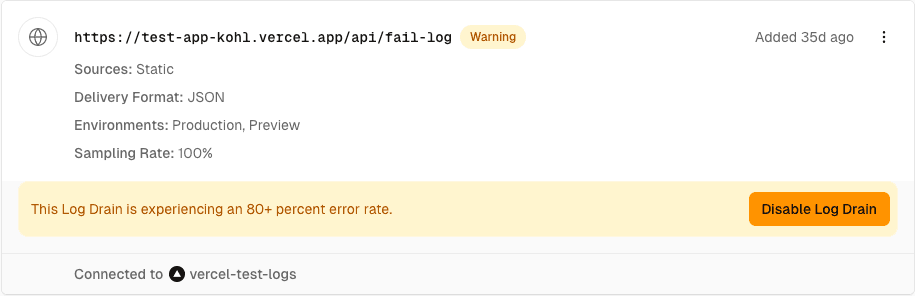

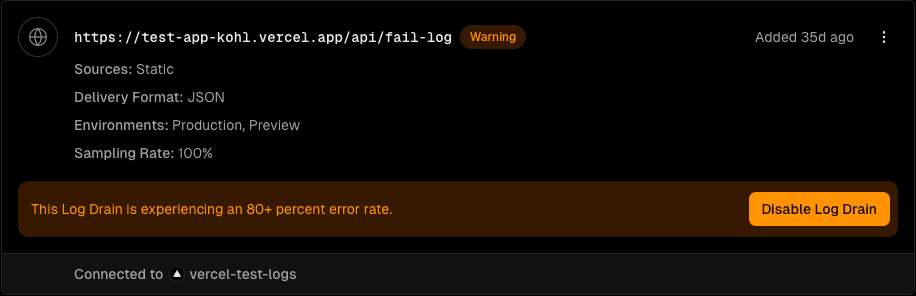

Occasionally, your log drain endpoints could return an error. If more than 80% of log drain deliveries return an error status and the number of errored deliveries exceeds 50 in the past hour, we will send a notification email and indicate the error status on your Log Drain page.
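The notification rule above combines two conditions, and both must hold. As a rough sketch of how you might mirror that check in your own monitoring (the function name and parameters are hypothetical, and this is not Vercel's implementation):

```python
def should_notify(errored: int, total: int,
                  percent_threshold: int = 80, min_errors: int = 50) -> bool:
    """Return True when both conditions from the text hold for the past hour:
    more than 80% of deliveries errored AND more than 50 deliveries errored.

    `errored` and `total` are delivery counts for the last hour.
    """
    if total <= 0:
        return False
    # Integer arithmetic avoids floating-point edge cases at exactly 80%.
    ratio_exceeded = errored * 100 > total * percent_threshold
    return ratio_exceeded and errored > min_errors
```

For instance, 51 errors out of 60 deliveries satisfies both conditions, while 50 errors out of 50 deliveries does not, because the error count must exceed 50 rather than equal it.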

Managing IP address visibility is available on Pro and Enterprise plans.

Those with the owner or admin role can access this feature.

Log drains enable you to capture and analyze your logs. Log drains can include public IP addresses in the logs, which may be considered personal information under certain data protection laws. However, you can hide IP addresses in your log drains:

- Go to the Vercel dashboard and ensure your team is selected in the scope selector.

- Go to the Settings tab and navigate to Security & Privacy.

- Under IP Address Visibility, toggle the switch off so that the text reads "IP addresses are hidden in your Log Drains".

This setting is applied team-wide across all projects and log drains.

For more information on log drains and how to use them, check out the following resources:
